Fang - A Language With Bite

For the past couple weeks, I’ve been attempting the daunting task of designing and implementing a programming language.

I’ve wanted to do this for a number of years, especially since Bob Nystrom released Crafting Interpreters a little while ago, and now felt like a good time to try.

I had a pretty terrible time of the Compilers course I took at univeristy and I kind of want to prove to myself that I have the ability to make something that actually works, without caveat.

(This urge actually hit me after a work conversation, where we narrowly avoided having to implement our own interpreted expression language. So I guess I’ll have to do it myself XD)

Fang

So, my programming language is named “Fang”. Fang is designed to be a pretty minimal language, which I want to use to compile software (games and applications) for very low powered, retro hardware.

The primary platforms I want to target are the NES (6502 CPU) and Game Boy (Z80-like hardware), although for development reasons I’m also targeting AArch64 (so I can run programs natively on my macbook), and I’ll probably support an x86_64 backend as well so I can run it on my lower-end project machine.

It could just-as-easily be implemented to run in a bytecode VM on slightly more powerful hardware too.

Fang is a (usually) compilered, imperative and procedural language with a static type system. I think the type system could be considered “weak” because it provides some ways to bypass the type-system, including raw pointer access. There isn’t a type conversion system implemented yet.

It only supports a few datatypes:

- Integers (signed, unsigned, 8-bit and 16-bit wide)

- Pointers (including function pointers)

- Arrays of a fixed type

- Composite record types (like “structs” in C)

- I’ve been toying with a “String” data type, which is structured like Pascal-strings: a length, followed by a character array, no null-termination. I’m not sure how much string manipulation we can support, but these would still be helpful at compile time for game text.

Fang doesn’t use heap-allocation for memory. Apart from globals which are allocated memory during compile time, everything is stack-allocated. This is because the platforms Fang is targeted at don’t have huge amounts of memory and so can’t really provide a useful “heap”.

In theory, someone could extend it with a heap allocator in the future though, it’s just not baked into the language.

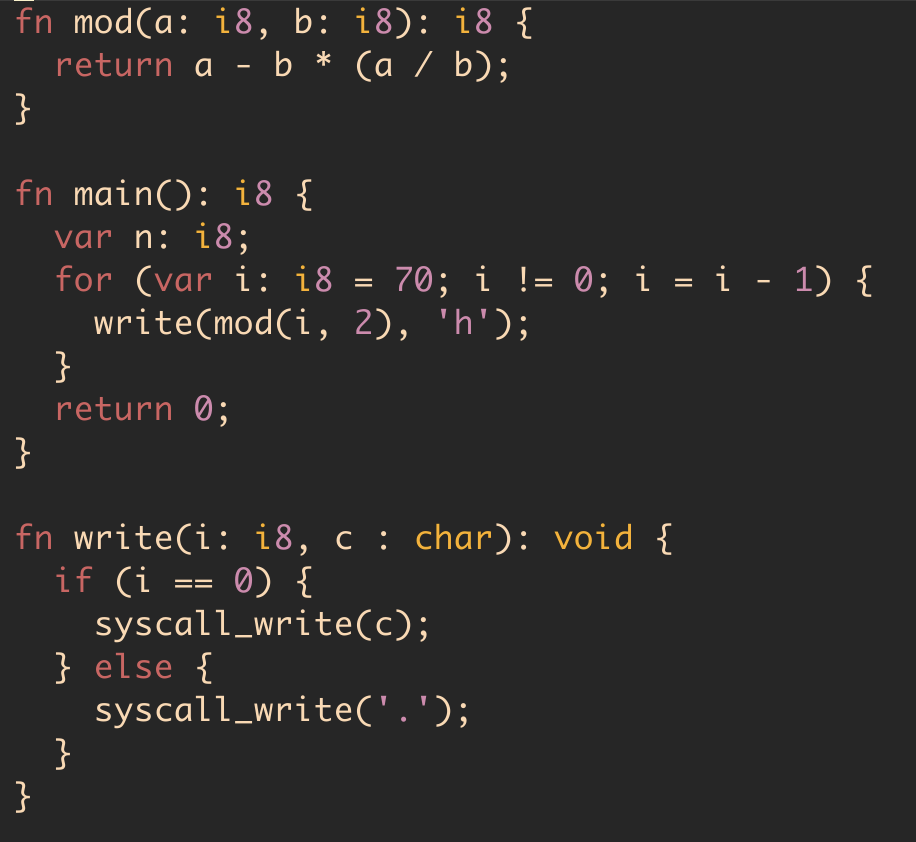

Here’s a really terrible program:

and it outputs:

Offscreen is syscall_write(c: char), which then runs a hand-crafted piece of assembly code to

run a “write” syscall to print to standard out.

Being able to emit raw assembly seemed useful and important for allowing the developer to write optimised code in certain situations. It looks like it’ll be helpful for implementing a standard library also.

Implementation Progress

The compiler I have so far is written in C and outputs ARM64 assembly, which is then assembled by the toolchain into an executable.

It fully parses and type-checks the input program, and determines if it’s valid. Sadly the error messages when it isn’t valid aren’t currently all there, or very good. I really need to improve those.

After that, it starts generating assembly. For the moment, this code generation is really naive, so if you try to compile complex expressions it will declare it has run out of registers and abort.

The naive approach also means that it produces a lot of redundant operations, because each statement’s code is generated in isolation.

This is acceptable during development, but eventually I’ll need to implement some optimisation passes as well as a mechanism for efficient register-spilling so that the program code can be as space and time-efficient as possible.

Low-powered hardware doesn’t have a lot of registers and with lower processing speeds, we can’t afford a lot of memory operations to pull and store data constantly.

I’ve not got a good handle yet on how exactly that kind of data flow optimisation is going to work, but I’m going to try and read a bunch of resources around compiler implementation to figure it out.

Absorbing the various bits of research and guidance around compilers is quite challenging, as a lot of it is written with academic style and vocabulary, and focused on research. This was one of the barriers I found to my comprehension of this at university, but I am doing my best to grok it.

Find out more

I’m posting bite-sized updates on Mastodon. I use the hashtag #fanglang to group the posts together. Just today, I managed to sort out a bunch of stack frame issues, so I can call functions within functions, passing parameters around with nested scopes. Pretty cool!